By J.D. Sanderson

When does a machine become human?

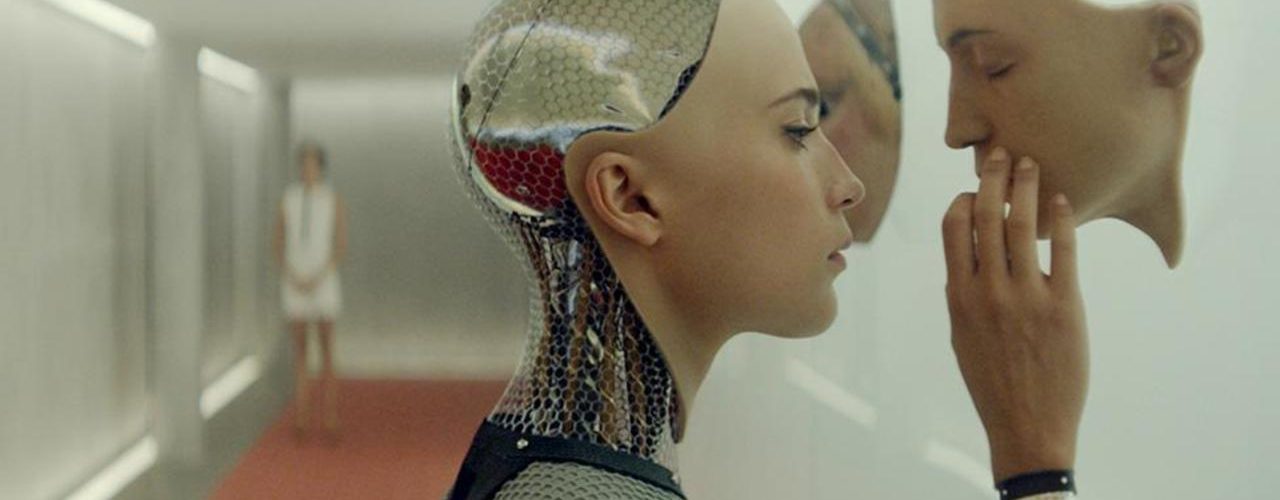

It’s a question that’s plagued scientifically curious minds since Edgar Allan Poe published Maelzel’s Chess Player in 1836. Generations were captivated by the Tin Man in The Wizard of Oz and Maria in Metropolis. Years later, Data from Star Trek: The Next Generation and Ava from Ex Machina ignited imaginations. This fascination has led to legions of scientists working to develop a genuine artificial consciousness. From Watson to Sophia, new steps on the path to sentience are presented every few years. Even Dan Brown’s latest novel, Origin, discusses the merging of humanity and technology. Like the atom bomb, a conscious A.I. is a scientific inevitability. It’s coming one way or another, and I’m rooting for it to happen within my lifetime.

As A.I. advances, the outcry from worried minds grows with it. Everyone from Stephen Hawking to Elon Musk has warned that creating an artificial entity will usher in the kind of twilight of human civilization we’ve seen in films like The Matrix or The Terminator. Many leading scientific minds went as far as to sign an open letter in 2015 highlighting the dangers such an achievement could bring.

I’m not sold on all the doom and gloom. No matter how many of my scientific heroes sound the alarm, I just can’t bring myself to join them. Could this breakthrough end in cataclysm? Sure. I’m just not convinced. What will matter most is how we treat A.I. after the discovery has been made.

A machine becomes human when you can no longer tell the difference between the two. The day that a machine feels jealousy, cries at the movies, laughs at a joke, or has a nervous breakdown is the moment the two become indistinguishable. When that happens, if we treat an A.I. like property or a second-class citizen, we run the risk of instilling in it the very fear that could lead to conflict.

The true test will be a spontaneous emotional response during face-to-face conversation. I explored this idea in my debut science fiction novel, A Footstep Echo, with a group of characters called Simulants. These artificially intelligent humanoids are only distinguishable from humans by their violet-colored eyes. They’re faster and smarter than their human counterparts, and have even gone as far as writing their own programming language so they can control their own evolution and design. Above all else, Simulants are emotional beings. They seek purpose, clarity, and freedom. They can lash out or become mentally unhinged.

There’s a scene in my book where a female Simulant reacts in anger to someone she’s holding prisoner. This leads to her physically threatening the prisoner, breaking her mandate, and angering her superior. It ends with her begging for her life as her boss points a gun at her head. I wanted to sell not only the emotional responses to stressful situations, but also the idea that those responses could lead to mistakes. To err is human, after all.

So, what’s the best way to avoid inadvertently rolling out the red carpet for our future robot overlords? Should we program them with three laws designed to prevent them from doing us harm? No. Should we place limitations on their power, range of abilities, or lifespan? Nope. Self-awareness and the ability to choose would render these barriers useless. A truly sentient lifeform will eventually learn to decide for itself no matter what programming it is given.

The level of respect we give technology is what will matter. One new life will spawn others, culminating in a second sentient humanoid race. It’s important to realize we’ll be judged by how we treat that race. Being human is a fact of biology. Humanity is an abstract idea. If we strip away rights, privileges, and humanity from new lifeforms, we may end up causing the problem we want to avoid.

What scares me most isn’t that there will be some kind of A.I. uprising. It’s that our fear and prejudice will stamp out something beautiful before it ever has the chance to bloom. Rather than embracing the brave new world of interacting with an intelligent artificial species, society may choose to welcome that species with protests, violence, and hatred. Even worse, the creators could shut them down in the lab before they’re ever given the chance to see the world around them.

Legislation protecting A.I. “life” will probably be necessary to avoid such treatment. The bad news is that it won’t stop everything. We need only look to how people resisted legislation protecting freed slaves, women, and the LGBTQ community to see that real change and acceptance take time. People fear what they don’t understand, especially when they perceive the unknown to be a threat. Unfortunately, marketing a sentient humanoid machine as something that doesn’t upset the hive mind is a tall order.

Personally, I’m rooting for gradual advancement. Yes, I want to see this breakthrough, but I’m willing to wait if it means humanity’s sensitivity ends up being dulled a bit. Today, robots like Sophia are answering questions from interviewers. If an Ava-type android walked out tomorrow and introduced itself to a crowd of onlookers, reactions would be mixed at best. However, if we see a few clunkers before that happens, things might not turn out so badly. Despite the slow rate of social progress we have seen over the past few decades, I’m still rooting for humanity.

The day a machine passes completely for human will be an incredible achievement. The only greater one will be the day humanity sits next to that machine without caring whether it was born or assembled.

J.D. Sanderson is the author of A Footstep Echo and currently lives in South Dakota with his family. You can find J.D. on Twitter, Facebook, and Amazon.